China Automotive Gesture Interaction Development Research Report, 2022-2023

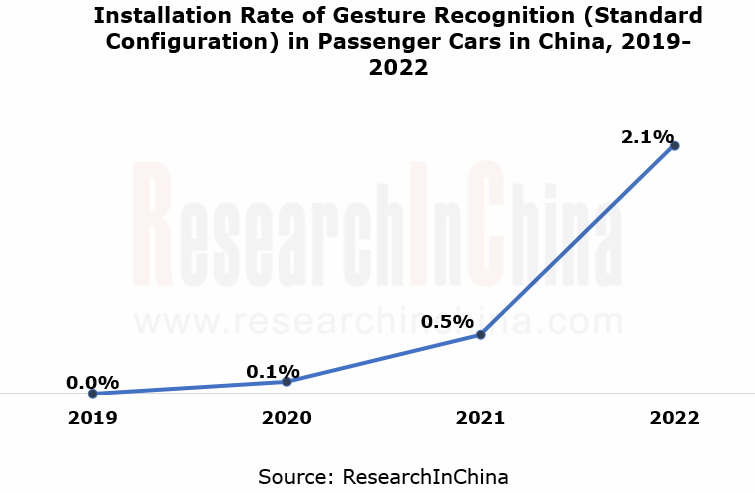

Vehicle gesture interaction research: in 2022, installations of gesture recognition functions rocketed by 315.6% year on year.

China Automotive Gesture Interaction Development Research Report, 2022-2023, released by ResearchInChina, analyzes four aspects: gesture interaction technology, benchmark vehicle gesture interaction solutions, the gesture interaction industry chain, and gesture interaction solution providers.

1. In 2022, installations of vehicle gesture recognition functions soared by 315.6% year on year.

As intelligent cockpit technology iterates, cockpit services are evolving from passive to active intelligence, and human-computer interaction is shifting from single-modal to multimodal. Against this backdrop, vehicle gesture interaction is booming. In 2022, gesture recognition was installed as standard in 427,000 passenger cars in China, up 315.6% year on year, and the installation rate reached 2.1%, 1.6 percentage points higher than in 2021.
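As a quick consistency check, the 2021 baseline and the overall market size implied by these figures can be back-calculated. This is only arithmetic on the report's rounded numbers; the derived values are estimates, not figures the report states.

```python
# Figures stated in the report for China's passenger car market, 2022.
installs_2022 = 427_000        # cars with gesture recognition as standard
yoy_growth = 3.156             # +315.6% year on year
rate_2022 = 0.021              # 2.1% installation rate
rate_2021 = rate_2022 - 0.016  # 1.6 percentage points lower in 2021

# Back-calculated (NOT reported) values; source rounding makes them approximate.
installs_2021 = installs_2022 / (1 + yoy_growth)
market_2022 = installs_2022 / rate_2022
market_2021 = installs_2021 / rate_2021

print(f"Implied 2021 installations:       {installs_2021:,.0f}")
print(f"Implied 2022 passenger car volume: {market_2022:,.0f}")
print(f"Implied 2021 passenger car volume: {market_2021:,.0f}")
```

Both implied market sizes come out at roughly 20 million passenger cars, so the growth rate, volume and installation rates quoted above are consistent with one another.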

By brand, Changan Automobile had the highest gesture recognition installation rate in 2022, at 33.0%, 13.1 percentage points higher than in 2021. By model, Changan had 6 models (e.g., UNI-V, CS75 and UNI-K) with gesture recognition as standard in 2022, 5 more than in 2021.

The gesture recognition of the Changan UNI-K adopts a 3D ToF solution and supports functions such as switching songs and starting navigation. The specific gestures are: swipe the palm horizontally to the left/right to play the previous/next song; make a finger heart to navigate home; give a thumbs-up to navigate to the workplace.
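A horizontal swipe like the UNI-K's song-switching gesture can be sketched as a net-displacement test on the tracked palm position. This is a minimal illustration, not Changan's implementation: the normalized palm coordinates, the thresholds, the hypothetical `classify_swipe` and `dispatch` helpers, and the left = previous, right = next mapping (read from the order in the text) are all assumptions.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical mapping, read from the order "left/right ... previous/next" in the text.
ACTIONS = {"swipe_left": "previous_song", "swipe_right": "next_song"}

def classify_swipe(track: Sequence[Tuple[float, float]],
                   min_dx: float = 0.25,
                   max_dy_ratio: float = 0.5) -> Optional[str]:
    """Classify a palm track as a horizontal swipe.

    track holds (x, y) palm positions in normalized [0, 1] coordinates, oldest first.
    Returns "swipe_left", "swipe_right", or None when the motion is too short
    or too vertical. Assumption: x grows toward the occupant's right; a mirrored
    camera image would flip this.
    """
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    if abs(dx) < min_dx or abs(dy) > max_dy_ratio * abs(dx):
        return None
    return "swipe_right" if dx > 0 else "swipe_left"

def dispatch(track: Sequence[Tuple[float, float]]) -> Optional[str]:
    """Map a recognized swipe to a media command, or None if nothing was recognized."""
    gesture = classify_swipe(track)
    return ACTIONS.get(gesture) if gesture else None

print(dispatch([(0.2, 0.50), (0.5, 0.52), (0.8, 0.50)]))  # prints next_song
```

Requiring the horizontal motion to dominate the vertical drift keeps an up-and-down hand movement from being misread as a song switch.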

2. The control scope of gesture recognition is extending from software to hardware, and from the inside to the outside of cars.

As gesture interaction technology spreads to ever more scenarios, vehicle gesture interaction is also taking off, and automakers are actively expanding their cockpit interaction functions. The scope of gesture control has grown from in-vehicle infotainment features (e.g., phone calls, media volume and navigation) to body hardware and safety systems (e.g., windows/sunroof/sunshades, doors, and driving).

In addition, manufacturers are developing exterior gesture control technology. One example is the WEY Mocha, which allows gesture control of ignition, forward/backward movement, stopping, and engine shutdown from outside the car. In the future, gesture recognition will no longer be limited to occupants and will gradually cover people outside the car, for instance recognizing command gestures of traffic police on the road or hand signals from cyclists near the car.

3. Six gesture recognition technology routes.

In terms of technology routes, the six main gesture recognition technologies are 3D camera-based structured light, ToF and stereo imaging, radar-based millimeter wave, ultrasonics, and bioelectricity-based myoelectricity.

At the current stage, 3D camera-based gesture sensing dominates vehicle gesture recognition. This route consists of a 3D camera and a control unit. The 3D camera, composed of a camera, infrared LEDs and a sensor, captures hand movements; image processing algorithms then recognize the type of gesture and issue the corresponding instructions. The 3D camera route can be subdivided into structured light, ToF and stereo vision.
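The capture-then-recognize flow can be illustrated with a simple depth-camera heuristic: treat the nearest object in each depth frame as the hand, then track its centroid across frames for a gesture classifier to consume. This is a hypothetical sketch; the millimetre depth format, the 100 mm band and the helper names are assumptions, and real systems typically use far more robust hand segmentation than this.

```python
from typing import List, Optional, Sequence, Tuple

DepthFrame = Sequence[Sequence[int]]  # assumed: depth in millimetres per pixel, 0 = no reading
Pixel = Tuple[int, int]

def segment_nearest(frame: DepthFrame, band_mm: int = 100) -> List[Pixel]:
    """Pixels within band_mm of the closest valid reading (heuristic: the hand is nearest)."""
    valid = [d for row in frame for d in row if d > 0]
    if not valid:
        return []
    nearest = min(valid)
    return [(r, c) for r, row in enumerate(frame) for c, d in enumerate(row)
            if d > 0 and d - nearest <= band_mm]

def centroid(pixels: Sequence[Pixel]) -> Optional[Tuple[float, float]]:
    """Mean (row, col) of the segmented pixels, or None if nothing was segmented."""
    if not pixels:
        return None
    n = len(pixels)
    return (sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n)

def track_hand(frames: Sequence[DepthFrame],
               band_mm: int = 100) -> List[Optional[Tuple[float, float]]]:
    """Hand centroid per frame; a gesture classifier (not shown) would consume this track."""
    return [centroid(segment_nearest(f, band_mm)) for f in frames]
```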

1. In structured light technology, light carrying coded information is projected onto the human body, an infrared sensor captures the reflected pattern, and a processor builds a 3D model from it. With mature hardware, high recognition accuracy and high resolution, it suits close-range scenarios within 10 meters. The gesture recognition on the Neta S, launched in July 2022, is a structured light solution.
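One common way to "code" structured light is a sequence of Gray-code stripe patterns: each camera pixel records whether it was lit under each pattern, the resulting bit string identifies the projector column that illuminated it, and depth follows by triangulation. The report does not say which coding the Neta S uses (single-shot dot patterns are another option), so this is a generic sketch; the function names, bit order, rectified camera-projector geometry and disparity sign are assumptions.

```python
from typing import Sequence

def gray_to_binary(g: int) -> int:
    """Convert a reflected binary (Gray) code to its plain binary value."""
    b = g
    shift = g >> 1
    while shift:
        b ^= shift
        shift >>= 1
    return b

def decode_pixel(bits: Sequence[int]) -> int:
    """bits[i] = 1 if the pixel was lit under pattern i (most significant pattern first).

    Returns the projector column index encoded by the Gray-code sequence.
    """
    g = 0
    for bit in bits:
        g = (g << 1) | bit
    return gray_to_binary(g)

def triangulate_depth(cam_col: float, proj_col: float,
                      focal_px: float, baseline_m: float) -> float:
    """Depth for an assumed rectified camera-projector pair: Z = f * b / disparity."""
    disparity = cam_col - proj_col  # sign convention depends on the assumed geometry
    if disparity <= 0:
        raise ValueError("non-positive disparity")
    return focal_px * baseline_m / disparity
```

Gray codes are used instead of plain binary because adjacent columns differ in only one bit, so a pixel sitting on a stripe edge is off by at most one column.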

The in-cabin gesture recognition sensor of the Neta S is located above the interior rearview mirror. It can recognize 6 gestures: swipe the palm back and forth to adjust the light transmittance of the sunroof; make the "shh" sign to enter silent mode; rotate a finger clockwise/counterclockwise to adjust the volume; move the palm left and right to switch audio and video programs; make a "V" sign to take a selfie in the car; give a thumbs-up to save a favorite program.
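The rotate-to-adjust-volume gesture needs the rotation direction of a fingertip path. One standard way to get it is the sign of the path's enclosed area (the shoelace formula). This is a sketch under stated assumptions, not the Neta S algorithm: the fingertip points come from a hypothetical tracker, the axes have y pointing up (negate y for image coordinates, whose y axis points down), and the clockwise = volume up mapping is hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

def signed_area(points: Sequence[Tuple[float, float]]) -> float:
    """Shoelace formula for the closed path; positive means counterclockwise with y pointing up."""
    area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        area += x1 * y2 - x2 * y1
    return area / 2.0

def rotation_direction(points: Sequence[Tuple[float, float]],
                       min_area: float = 0.01) -> Optional[str]:
    """'clockwise', 'counterclockwise', or None if the path encloses too little area."""
    if len(points) < 3:
        return None
    a = signed_area(points)
    if abs(a) < min_area:
        return None
    return "counterclockwise" if a > 0 else "clockwise"

# Hypothetical mapping: clockwise raises the volume.
VOLUME_STEP: Dict[str, int] = {"clockwise": +1, "counterclockwise": -1}
```

The `min_area` threshold rejects near-straight paths, such as a swipe, that enclose almost no area.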

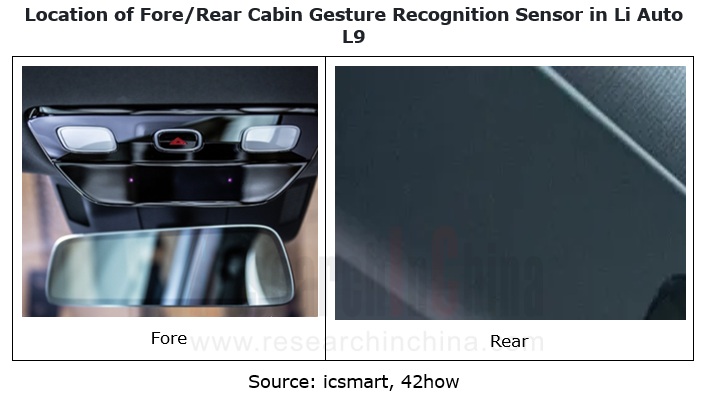

2. ToF (time-of-flight) technology measures distance from the flight time of emitted light and builds 3D images from the readings of the underlying photosensitive elements. It obtains usable, real-time depth information within 5 meters. It suits a wider range of scenarios, acquiring usable depth information whether the ambient light is strong (e.g., sunlight) or weak. The gesture recognition solutions in production models such as the BMW iX, Li Auto L9 and new ARCFOX αS HI Edition all use ToF.
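The ranging step itself is short. A pulsed ToF sensor converts round-trip time to distance as d = c·t/2, while a continuous-wave (modulated) sensor converts the measured phase shift φ to d = c·φ/(4π·f). The report does not say which variant these cars use; the 30 MHz modulation frequency below is an assumed value, and it happens to give an unambiguous range of about 5 m.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def pulse_depth(round_trip_s: float) -> float:
    """Pulsed ToF: light travels out and back, so d = c * t / 2."""
    return C * round_trip_s / 2.0

def phase_to_depth(phase_rad: float, f_mod_hz: float) -> float:
    """Continuous-wave ToF: the round trip adds phase 4*pi*f*d/c, so d = c*phi / (4*pi*f)."""
    phase = phase_rad % (2.0 * math.pi)  # measured phase is only known modulo 2*pi
    return C * phase / (4.0 * math.pi * f_mod_hz)

def unambiguous_range(f_mod_hz: float) -> float:
    """Distance at which the phase wraps around: c / (2 * f)."""
    return C / (2.0 * f_mod_hz)

print(f"{unambiguous_range(30e6):.3f} m")  # assumed 30 MHz modulation
```

Because the phase wraps at 2π, a lower modulation frequency extends the range at the cost of depth precision, which is one reason modulated ToF sensors are tuned to short in-cabin distances.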

The in-cabin gesture recognition sensor of the BMW iX sits in the dome light above the center console screen. It can recognize 8 gestures:

① swipe the hand left and right to reject a phone call/close a pop-up;

② point the index finger back and forth to answer a phone call/confirm a pop-up;

③ rotate the index finger clockwise to turn the volume up or zoom in on the navigation map;

④ rotate the index finger counterclockwise to turn the volume down or zoom out on the navigation map;

⑤ move a fist with the thumb extended to the left or right to play the previous/next song;

⑥ point the extended index and middle fingers toward the display to trigger an individually assignable function;

⑦ stretch out all five fingers, make a fist, and then stretch out all five fingers again to trigger an individually assignable function;

⑧ bring the thumb and index finger together and swipe the hand to the right or left to view the surroundings of the car (requires the car to be equipped with the automated parking assist system PLUS).
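Gesture ⑦ is a sequence (open hand, fist, open hand) rather than a single pose, so it needs a small amount of temporal logic on top of per-frame pose labels. A minimal sketch, assuming a hypothetical upstream classifier that labels each frame "open", "fist" or "other"; the 1-second limit on holding the fist is also an assumption.

```python
from typing import List, Sequence, Tuple

Frame = Tuple[float, str]  # (timestamp in seconds, pose label from a hypothetical classifier)

def collapse(frames: Sequence[Frame]) -> List[Frame]:
    """Merge consecutive frames with the same label, keeping each run's first timestamp."""
    runs: List[Frame] = []
    for t, label in frames:
        if not runs or runs[-1][1] != label:
            runs.append((t, label))
    return runs

def detect_open_fist_open(frames: Sequence[Frame], max_fist_s: float = 1.0) -> bool:
    """True if the labels go open -> fist -> open with the fist held at most max_fist_s."""
    runs = collapse(frames)
    for i in range(len(runs) - 2):
        (_, a), (t_fist, b), (t_reopen, c) = runs[i], runs[i + 1], runs[i + 2]
        if (a, b, c) == ("open", "fist", "open") and t_reopen - t_fist <= max_fist_s:
            return True
    return False
```

Collapsing repeated labels first makes the check independent of frame rate; the time limit separates this deliberate gesture from simply closing the hand to rest.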

To support gesture control for occupants, the Li Auto L9 has gesture recognition sensors in both the front and rear cabins. The front sensor is located above the interior rearview mirror, and the rear one above the rear entertainment screen.

The front cabin sensor can recognize 2 gestures:

① point at a window, the sunroof or a sunshade to control it (combined with voice interaction);

② make and hold a fist, then move it up and down on the playback page to adjust the volume.

The rear cabin sensor can recognize 7 gestures:

① stretch out all five fingers and rest the inner side of the elbow on the armrest for 2 seconds to activate gesture control;

② stretch out all five fingers and swipe the hand down to turn on the screen;

③ stretch out all five fingers and move the hand to move the cursor;

④ stretch out all five fingers, then make a fist to select an icon;

⑤ stretch out all five fingers, make and hold a fist, and move the hand to share content from the rear entertainment screen to the front display;

⑥ stretch out all five fingers, make and hold a fist, and swipe the hand left and right on the playback page to adjust playback progress;

⑦ stretch out all five fingers and swipe the hand up to exit the current content.
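Gesture ① works as an activation gate: other gestures are ignored until the activation pose has been held for 2 seconds, which guards against accidental triggers. The sketch below is hypothetical: `pose_ok` stands for "five fingers stretched out with the elbow on the armrest" as reported by an assumed upstream detector, and the 10-second idle timeout is invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ActivationGate:
    """Hold-to-activate gate: commands pass only after the pose is held for hold_s seconds."""
    hold_s: float = 2.0            # from the report: hold for 2 seconds
    idle_timeout_s: float = 10.0   # hypothetical: deactivate after this long without a command
    active: bool = False
    _hold_start: Optional[float] = field(default=None, init=False)
    _last_command: float = field(default=0.0, init=False)

    def update(self, t: float, pose_ok: bool, command: Optional[str] = None) -> Optional[str]:
        """Feed one frame at time t; returns the command if the gate is active, else None."""
        if self.active:
            if command is not None:
                self._last_command = t
                return command
            if t - self._last_command > self.idle_timeout_s:
                self.active = False
            return None
        # Not active yet: measure how long the activation pose has been held continuously.
        if pose_ok:
            if self._hold_start is None:
                self._hold_start = t
            elif t - self._hold_start >= self.hold_s:
                self.active = True
                self._hold_start = None
                self._last_command = t
        else:
            self._hold_start = None  # pose broken: restart the hold timer
        return None
```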

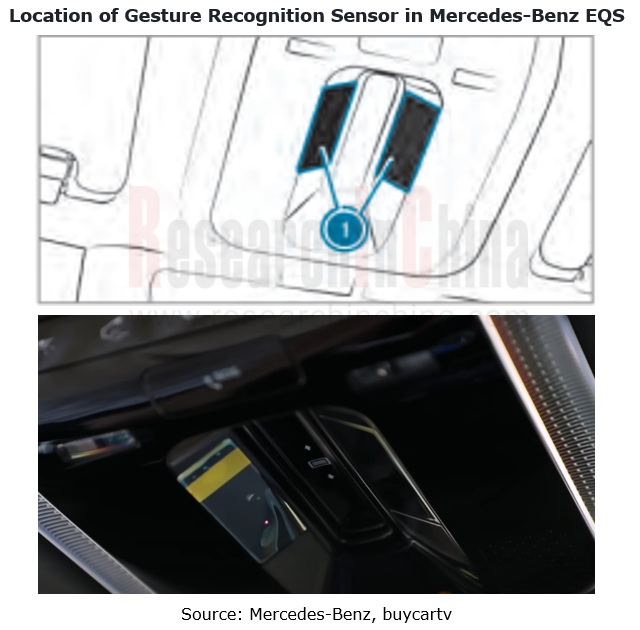

3. Stereo imaging technology, based on the parallax principle, recovers the 3D geometry of objects from multiple images. It is a cost-effective solution with low hardware requirements and no need for additional special devices. The gesture recognition on the Mercedes-Benz EQS, launched in May 2022, is a stereo imaging solution.
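The parallax principle reduces to two steps on a rectified image pair: find how far a point shifts between the left and right images (the disparity d), then convert it to depth with Z = f·B/d, where f is the focal length in pixels and B the camera baseline. The sketch below uses textbook sum-of-absolute-differences block matching on a single scanline; it is a generic illustration, not Mercedes-Benz's undisclosed method, and the window size and disparity range are assumed values.

```python
from typing import Sequence

def disparity_at(left: Sequence[float], right: Sequence[float], x: int,
                 half_win: int = 2, max_disp: int = 32) -> int:
    """Disparity of pixel x on a rectified scanline via sum-of-absolute-differences matching.

    A point at column x in the left image appears at column x - d in the right image.
    """
    if x - half_win < 0 or x + half_win >= len(left):
        raise ValueError("window falls outside the scanline")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - half_win < 0:
            break  # candidate window would leave the right image
        cost = sum(abs(left[x + k] - right[x - d + k]) for k in range(-half_win, half_win + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def depth_from_disparity(d_px: float, focal_px: float, baseline_m: float) -> float:
    """Parallax relation Z = f * B / d for a rectified stereo pair."""
    if d_px <= 0:
        return float("inf")  # zero disparity: point at (effectively) infinite distance
    return focal_px * baseline_m / d_px
```

Because Z is inversely proportional to d, a one-pixel matching error matters far more for distant points than for a hand a short distance from the cameras.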

The in-cabin gesture recognition sensor of the Mercedes-Benz EQS is located in the roof reading light and can recognize 3 gestures:

① make a "V" sign to call up favorites;

② swipe the hand back and forth under the interior rearview mirror to control the sunroof;

③ swipe the hand toward the inside of the car to close the doors automatically (requires the optional four-door electric switches).

Currently, radar-based millimeter wave, ultrasonic, and bioelectricity-based myoelectric gesture recognition have yet to be widely used in in-cabin gesture recognition functions. Compared with conventional vision-based gesture recognition, these technologies still face limitations and challenges.

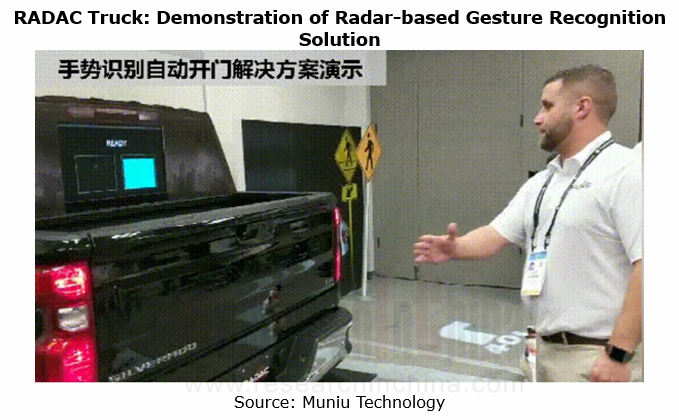

1. Radar is a radio wave sensor that enables accurate detection of the position and movements of hands even in the presence of obstacles.
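Many automotive millimeter-wave radars use FMCW (frequency-modulated continuous-wave) chirps, where range comes from the beat frequency between transmitted and received signals and motion comes from the Doppler shift. The report does not state which waveform the solution below uses, so treat this as a generic sketch; the 4 GHz sweep, 40 µs chirp and 77 GHz carrier in the usage are assumed values.

```python
C = 299_792_458.0  # speed of light, m/s

def fmcw_range(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Target range for a linear FMCW chirp: R = c * f_beat / (2 * slope), slope = B / T."""
    slope = bandwidth_hz / chirp_s  # chirp slope in Hz per second
    return C * beat_hz / (2.0 * slope)

def range_resolution(bandwidth_hz: float) -> float:
    """Smallest separable range difference: c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)

def radial_velocity(doppler_hz: float, carrier_hz: float) -> float:
    """Radial speed from the Doppler shift: v = wavelength * f_d / 2."""
    wavelength = C / carrier_hz
    return wavelength * doppler_hz / 2.0

# Assumed parameters: 4 GHz sweep over 40 microseconds, 77 GHz carrier.
print(f"range: {fmcw_range(1e6, 4e9, 40e-6):.3f} m")
print(f"resolution: {range_resolution(4e9) * 100:.2f} cm")
print(f"hand speed: {radial_velocity(500, 77e9):.3f} m/s")
```

Wide sweep bandwidth is what gives millimeter-wave radar the centimeter-level range resolution needed to follow hand movements.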

In 2020, Ainstein, the US subsidiary of Muniu Technology, and ADAC Automotive established a joint venture brand, RADAC. At CES 2020, Ainstein introduced a radar-based vehicle gesture recognition solution. Its gesture recognition sensor sits at the top of the tailgate, allowing users to open the tailgate by swiping a hand left and right.

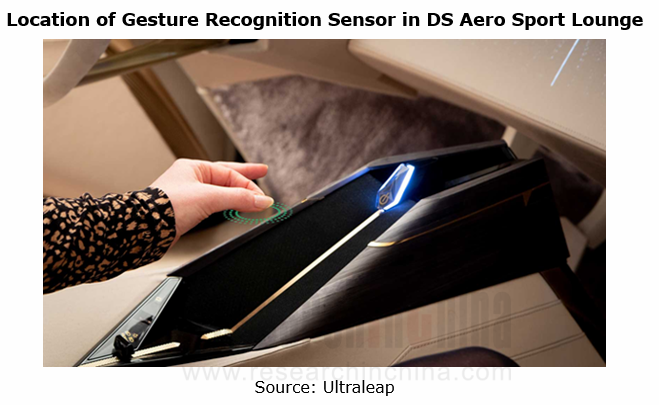

2. Ultrasonic radar. In February 2020, DS showcased the Aero Sport Lounge concept car at the Geneva International Motor Show. Integrating Leap Motion and Ultrahaptics technologies, the car recognizes the gestures made by occupants and gives them haptic feedback through stereo ultrasonic waves emitted by micro-speakers.
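Mid-air ultrasonic haptics generally rely on a phased array: each transducer fires with a small delay so that all wavefronts arrive at the same point at the same moment, creating a pressure spot the hand can feel. Ultrasonic ranging, in turn, uses d = v·t/2 with the speed of sound. The sketch below shows both in generic form; it is not the DS or Ultrahaptics implementation, and the array layout and 343 m/s speed of sound (air at about 20 °C) are assumptions.

```python
import math
from typing import List, Sequence, Tuple

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)

Point = Tuple[float, float, float]

def focus_delays(transducers: Sequence[Point], focus: Point) -> List[float]:
    """Per-transducer firing delays (s) so all wavefronts reach `focus` at the same time.

    The farthest transducer fires first (delay 0); nearer ones wait for the path difference.
    """
    dists = [math.dist(p, focus) for p in transducers]
    farthest = max(dists)
    return [(farthest - d) / SPEED_OF_SOUND for d in dists]

def echo_distance(round_trip_s: float) -> float:
    """Ultrasonic ranging: sound travels out and back, so d = v * t / 2."""
    return SPEED_OF_SOUND * round_trip_s / 2.0

# Usage: three transducers 1 cm apart, focusing 20 cm above the center one.
array = [(-0.01, 0.0, 0.0), (0.0, 0.0, 0.0), (0.01, 0.0, 0.0)]
print(focus_delays(array, (0.0, 0.0, 0.2)))
```

Moving the focal point only requires recomputing the delays, which is how such arrays can track a moving hand.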

The in-cabin gesture recognition and ultrasonic feedback sensor of the DS Aero Sport Lounge is located in the center armrest and supports 5 gesture functions:

① adjust cabin temperature and fan speed;

② change tracks and adjust volume;

③ operate navigation/maps, including setting new routes;

④ answer/reject phone calls;

⑤ switch menu functions.

3. Bioelectricity here refers to the electric signals generated by muscle activity. Bioelectric sensors can recognize gestures and movements by measuring these signals. At present, myoelectric gesture recognition is mainly used to control external devices and interfaces such as prosthetics, virtual reality and gaming devices. Thalmic Labs, a Canadian company dedicated to smart gesture control products, introduced its first wearable device, the MYO armband, which uses myoelectric technology. The eight myoelectric sensors embedded in the armband record the electric signals of the arm muscles, and different gestures are recognized by analyzing these signals. In practice, users can control drones, computers, smartphones and other electronic devices through MYO's Bluetooth connection. There are no vehicle use cases at present.
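A simple baseline for myoelectric gesture recognition computes the root-mean-square (RMS) amplitude of each sensor channel over a short window, then assigns the window to the gesture whose average feature vector is closest. This is a generic sketch, not Thalmic Labs' algorithm: the eight-channel input matches the armband described above, but the windowing, the RMS feature and the nearest-centroid classifier are assumed choices made for illustration.

```python
import math
from typing import Dict, List, Sequence

def rms_features(window: Sequence[Sequence[float]]) -> List[float]:
    """RMS amplitude per channel; window[t][ch] is one EMG sample (e.g. 8 channels)."""
    n = len(window)
    channels = len(window[0])
    return [math.sqrt(sum(s[ch] ** 2 for s in window) / n) for ch in range(channels)]

def train_centroids(examples: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Average the feature vectors recorded for each labelled gesture."""
    centroids = {}
    for label, feats in examples.items():
        k = len(feats)
        centroids[label] = [sum(f[i] for f in feats) / k for i in range(len(feats[0]))]
    return centroids

def classify(features: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Nearest-centroid classification by Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))
```

Per-user calibration, recording a few examples of each gesture before use, is typically needed because muscle signals vary between people and with armband position.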
